It is no secret that, in the near future, Artificial Intelligence will be built into most technological devices. Artificial Intelligence algorithms can make predictions and decisions with human-like reasoning on very specific tasks, considerably reducing errors and processing time and therefore greatly increasing the efficiency of the systems that use them.

There are many fields of study where progress has stalled because of technological limitations such as the need for large amounts of memory and high processing capacity. In many of these fields, Artificial Intelligence techniques help us overcome those limitations.

This time we are going to talk about the field of Artificial Intelligence that helps machines understand, interpret and manipulate human language: Natural Language Processing (NLP).

Reaching the current state of the art in NLP has required new algorithms, which in turn have driven rapid progress across the field of Artificial Intelligence. Applications built on these algorithms now let us hold human-machine conversations of fairly good quality.

These technologies allow us to identify the sentiment with which a sentence is expressed, to predict whether the second of two sentences has a contextual relationship with the first, or even to check whether the two sentences are semantically equivalent.

One of the technologies that performs these tasks with the greatest reliability and accuracy is BERT, developed by Google in 2018.

The road to BERT

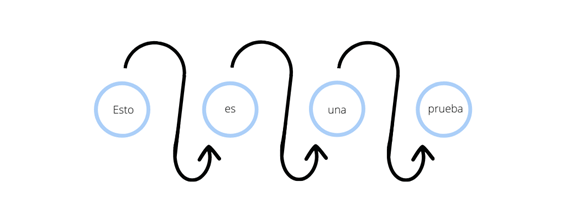

Before Transformers, NLP systems relied heavily on Recurrent Neural Networks (RNNs). An RNN is a Deep Learning model that, as the name suggests, processes data recurrently: the elements of a sequence are fed into the same neural network one after the other.

How would this work in our field?

Suppose we have a complete sentence, “This is a test”, and we need our neural network to process it so that the output gives us, for example, a semantic idea of what the sentence means.

The first step is to split the sentence into words. Then the recurrence begins: we feed only the first word into the neural network. Once we have the network's result for the word “This”, we combine that result with the next word, “is”, and feed both into the same neural network. The new result, as we would expect, takes into account the first two words, “This is”. We continue in this way until the last word, at which point the whole sentence has been processed and every word has been taken into account.
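The recurrence described above can be sketched in a few lines of Python. This is only a minimal illustration, not the way any particular library implements RNNs: the word vectors, the weight matrices `W_h` and `W_x`, and the function name `rnn_encode` are all made up for the example, and the weights are random rather than trained.

```python
import numpy as np

def rnn_encode(words, embed, W_h, W_x):
    """Feed the words one at a time, carrying the previous result forward."""
    h = np.zeros(W_h.shape[0])           # state before any word has been read
    for word in words:                   # strictly sequential: one word per step
        x = embed[word]                  # vector for the current word
        h = np.tanh(W_h @ h + W_x @ x)   # previous result combined with the new word
    return h                             # depends on every word of the sentence

rng = np.random.default_rng(0)
words = "This is a test".split()         # step 1: split the sentence into words
dim = 4
# Assumption: random word vectors and weights stand in for trained ones.
embed = {w: rng.normal(size=dim) for w in words}
W_h = rng.normal(scale=0.5, size=(dim, dim))
W_x = rng.normal(scale=0.5, size=(dim, dim))

sentence_vec = rnn_encode(words, embed, W_h, W_x)
print(sentence_vec.shape)  # (4,)
```

Note that the loop cannot start on “is” until it has finished with “This”, which is exactly the property discussed next.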

At first glance everything seems fine, but this type of network has two major problems.

The first is obvious: because the network is recurrent, it must finish processing the word “This” before it can start on the word “is”, and so on, so the total processing time grows with the number of words in the sentence. These algorithms are therefore generally slow.

The second is that these systems “forget” over long sentences: by the final calculation, the network no longer takes the initial words into account. If the sentence is long enough, the word “This” has practically no effect on the final result, and the meaning the network assigns to the sentence changes.
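The forgetting effect can be made visible with a toy experiment. The sketch below is an assumption-heavy illustration, not a measurement on a real model: the recurrent weights are deliberately chosen so that each step halves the contribution of the previous state, and all inputs are random vectors. We change only the first word and measure how much the final result moves.

```python
import numpy as np

rng = np.random.default_rng(0)
dim = 8
# Assumption: recurrent weights that shrink the carried state by half per step.
Q, _ = np.linalg.qr(rng.normal(size=(dim, dim)))   # random orthogonal matrix
W_h = 0.5 * Q
W_x = rng.normal(scale=0.3, size=(dim, dim))

def final_state(inputs):
    """Run the recurrence over all inputs and return the last state."""
    h = np.zeros(dim)
    for x in inputs:
        h = np.tanh(W_h @ h + W_x @ x)
    return h

def first_word_influence(length):
    """Change in the final state when only the first word is swapped."""
    rest = [rng.normal(size=dim) for _ in range(length - 1)]
    first_a = rng.normal(size=dim)
    first_b = rng.normal(size=dim)
    return np.linalg.norm(final_state([first_a] + rest) - final_state([first_b] + rest))

influence_short = first_word_influence(2)    # two-word "sentence"
influence_long = first_word_influence(20)    # twenty-word "sentence"
print(influence_short > influence_long)      # True
```

With these weights the influence of the first word shrinks by at least half at every step, so after twenty words it has all but disappeared. Trained RNNs behave less uniformly, but the tendency is the same.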

The solution to these problems came with the famous 2017 paper “Attention is all you need”, in which RNNs are completely replaced by attention mechanisms.

And what are attention mechanisms?

They are mechanisms that let the network process all the words of a sentence in parallel, which addresses both the processing time and the lack of memory that RNNs suffered from.

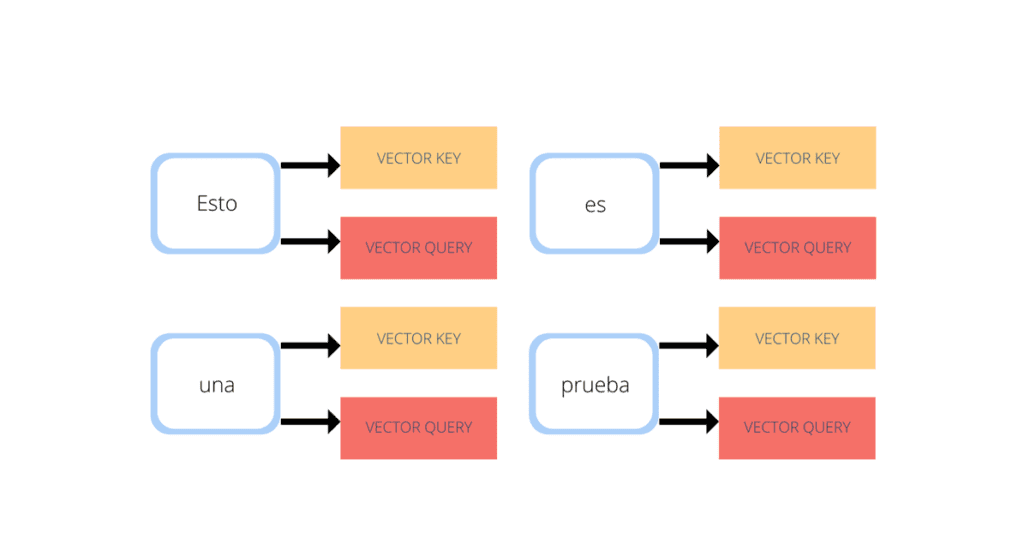

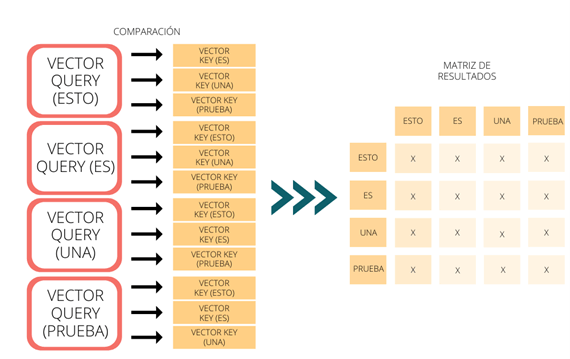

The idea is fairly simple. Each word of the sentence is fed as input to two different neural networks. The first produces a representation of what the word means, and the second a representation of the kind of words it seeks to relate to. Each word therefore carries two values: its meaning, called the key vector, and its needs, called the query vector, as illustrated for the example sentence.

Once we have these vectors, a simple calculation gives the correlation of each word with the rest of the sentence: we compare what each word is looking for (its query vector) with what the other words actually are (their key vectors), and get a match when the two are compatible. To relate all the words to each other, the query vector of one word is compared with the key vectors of the other words, and the process is repeated for every word in the sentence.

The results of these comparisons are stored in a results matrix. For the example sentence this is a 4×4 matrix whose rows and columns are the words “This”, “is”, “a” and “test”; each entry X is the correlation between the word of its row and the word of its column.
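As a rough sketch of that calculation, the snippet below builds the results matrix for “This is a test”. Several things here are assumptions made for illustration: the word vectors and the two “networks” (reduced to single weight matrices `W_q` and `W_k`) are random rather than trained, and the sizes are arbitrary. The scaling by the square root of the vector size and the softmax normalisation follow the formulation in the paper; the paper's value vectors, which this article does not cover, are left out. For simplicity each word is also compared with itself.

```python
import numpy as np

rng = np.random.default_rng(0)
words = "This is a test".split()
d_model, d_k = 8, 4                          # arbitrary sizes for the example

# Assumption: random stand-ins for trained embeddings and networks.
X = rng.normal(size=(len(words), d_model))   # one row per word
W_q = rng.normal(size=(d_model, d_k))        # "what am I looking for?"
W_k = rng.normal(size=(d_model, d_k))        # "what am I?"

Q = X @ W_q                                  # query vector of every word
K = X @ W_k                                  # key vector of every word

# scores[i, j] compares the query of word i with the key of word j.
scores = Q @ K.T / np.sqrt(d_k)

# Softmax over each row turns the scores into weights that sum to 1.
weights = np.exp(scores - scores.max(axis=1, keepdims=True))
weights /= weights.sum(axis=1, keepdims=True)

print(weights.shape)  # (4, 4)
```

All rows are computed by a single matrix product, with no loop over the words, which is where the parallelism comes from.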

BERT

Bidirectional Encoder Representations from Transformers, BERT, relies on Transformer technology to work. Its great power is its versatility, as it can be used to solve many tasks, why is this?

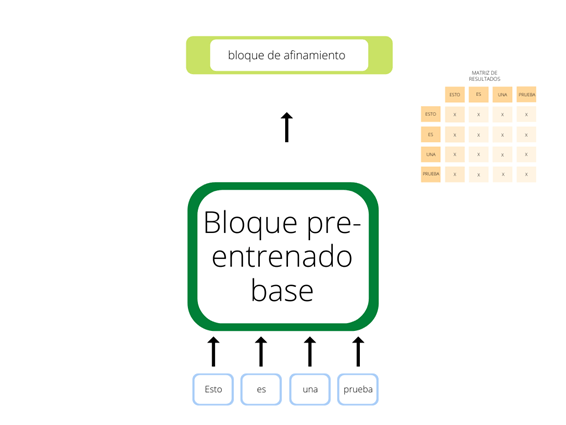

This is due to its internal architecture. BERT contains two major blocks. The first is a general pre-training block based on Transformer networks, which allows the basic understanding of the language, and the second block, concatenated to the previous one, allows tuning the operation of the system through Deep Learning, depending on the final need. This second block is the one that allows this great versatility, since it is a small block with great potential that relies on an already trained block that supports the basic recognition of the language.

With this matrix of results we can consider the importance of each word with respect to the rest, allowing the machine to understand the context of the sentence.

Therefore, from this paper arise the new great Artificial Intelligence systems for NLP, the Transformers.

It was a matter of time before the development of Artificial Intelligences based on the Transformer technology, being BERT one of the most promising ones.

To understand this, imagine a first-year university student who has to pass an exam on integration. The student must learn to integrate, but this will be far easier with a solid grounding in differentiation. In this analogy, the student is the Artificial Intelligence, learning to integrate is the second (tuning) block, and the grounding in differentiation corresponds to the pre-trained base block.

It is important to see that, with the same grounding in differentiation, adjusting the tuning block could instead teach the student to calculate the acceleration of a moving system (the derivative of velocity with respect to time) or the currents in complex circuits (current being the derivative of electric charge with respect to time).
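The same idea can be sketched in code: one shared base, several small tuning blocks. Everything below is a toy stand-in and not BERT itself. The “pre-trained” base is just a fixed random matrix, the tuning block is a tiny logistic-regression head, and the two tasks are invented for the example. In practice, fine-tuning BERT often updates the base weights as well; here the base is kept frozen to keep the sketch short.

```python
import numpy as np

rng = np.random.default_rng(0)

# Assumption: a fixed random matrix plays the role of the pre-trained base block.
W_base = rng.normal(scale=0.3, size=(10, 16))

def base(x):
    """Frozen base block: turns raw inputs into general-purpose features."""
    return np.tanh(x @ W_base)

def train_head(features, labels, steps=500, lr=0.3):
    """Small tuning block: logistic regression trained by gradient descent."""
    w = np.zeros(features.shape[1])
    b = 0.0
    for _ in range(steps):
        p = 1.0 / (1.0 + np.exp(-(features @ w + b)))   # predicted probabilities
        grad = p - labels                               # gradient of the log-loss
        w -= lr * features.T @ grad / len(labels)
        b -= lr * grad.mean()
    return w, b

def accuracy(features, labels, head):
    w, b = head
    preds = (features @ w + b > 0).astype(float)
    return float(np.mean(preds == labels))

X = rng.normal(size=(200, 10))                   # hypothetical inputs
feats = base(X)                                  # computed once, shared by both tasks

task_a = (X[:, 0] > 0).astype(float)             # invented task A
task_b = (X[:, 1] + X[:, 2] > 0).astype(float)   # invented task B

head_a = train_head(feats, task_a)               # same base, different tuning block
head_b = train_head(feats, task_b)

print(accuracy(feats, task_a, head_a), accuracy(feats, task_b, head_b))
```

Only the heads are trained, and each has just 17 parameters, yet both tasks are learned from the same features. That is the student reusing one grounding in differentiation for several different exams.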

In conclusion, Artificial Intelligence is living up to its promise to change the world as we know it. In the field of NLP in particular, the progress of recent years has produced algorithms that, thanks to Transformers, are practical with the technology we have today.

BERT is already used in large projects, both academic and industrial, and a quick internet search is enough to understand the importance of these systems in our society. They meet real needs and provide real comforts, from enabling communication for people who cannot speak for medical reasons, in the purest Stephen Hawking style, to the convenience of discussing with our voice assistant at home whether we should wear warmer or lighter clothes to work today.

[*] At Teldat we thought it would be interesting to have external bloggers, and thus broaden the spectrum of information that is transmitted from Teldat Blog. This week, Ivan Castro, a student at the University of Alcala de Henares, writes about BERT.